Pattern Theory and Applications

Sem resumo de edição |

(home page update) |

||

| (31 revisões intermediárias pelo mesmo usuário não estão sendo mostradas) | |||

| Linha 1: | Linha 1: | ||

This is the main page of a graduate-level course in pattern theory, machine learning, pattern formation, pattern recognition and computer vision being taught in | This is the main page of a graduate-level course in pattern theory, machine learning, pattern formation, pattern recognition and computer vision being taught in 2015/2 at the Polytechnic Institute [http://pt.wikipedia.org/wiki/IPRJ IPRJ]/UERJ. It is generally useful for computer scientists, statisticians, and applied mathematicians wishing to automatically model and analyze phenomena from sets of images and other signals. Think of this course as a special 'flavor' of artificial intelligence which has been largely developed at one of the instructor's ''alma mater'', Brown University, through researchers such as fields medalist David Mumford and Ulf Grenader. | ||

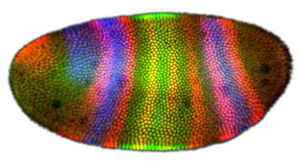

[[Image:Fruitflyembryo.jpg|right|300px]] | [[Image:Fruitflyembryo.jpg|right|300px]] | ||

- Link to the course pages for previous years: 2013, 2014

Current edition as of 14:47, 18 August 2020

This is the main page of a graduate-level course in pattern theory, machine learning, pattern formation, pattern recognition and computer vision, taught in 2015/2 at the Polytechnic Institute IPRJ/UERJ. It is useful for computer scientists, statisticians, and applied mathematicians who wish to automatically model and analyze phenomena from sets of images and other signals. Think of this course as a special 'flavor' of artificial intelligence developed largely at Brown University, the alma mater of one of the instructors, by researchers such as Fields Medalist David Mumford and Ulf Grenander.

General Info

- Instructors: Prof. Francisco Duarte Moura Neto, Ph.D. Berkeley, and Prof. Ricardo Fabbri (http://rfabbri.github.io), Ph.D. Brown University

- Meeting times: Thursdays 3:10pm - 6:45pm, Room 210.

- Forum for file exchange and discussion: uerj.tk

Course Format

- Evaluation criteria: Final grade = projects (60%), class participation (20%) and exercises/reading summaries (20%)

- Each student will have a main project to develop throughout the semester

  - There will be midterm and final project presentations, each worth 50% of the project grade

- Lab exercises and readings (papers, book chapters, etc.) will be assigned in almost every class

  - Each reading must be summarized with personal opinions and reflections; the summary must be typed and handed in

  - Discussion and coding in class will be graded as "class participation"

  - Bring your laptops!
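The weights above combine into a single weighted average. Below is a minimal sketch in Python. It assumes every component is scored on the same scale, for example 0 to 10; the course page does not specify a scale. The function name `final_grade` and its parameters are illustrative and are not part of any course tooling.

```python
def final_grade(mid_presentation, final_presentation, participation, summaries):
    """Combine component scores using the course's stated weights.

    Assumption: all four scores are on the same scale (e.g. 0 to 10).
    """
    # Mid and final project presentations are each 50% of the project grade.
    project = 0.5 * mid_presentation + 0.5 * final_presentation
    # Final grade: projects 60%, class participation 20%,
    # exercises/reading summaries 20%.
    return 0.6 * project + 0.2 * participation + 0.2 * summaries


if __name__ == "__main__":
    # Presentations 6 and 8, participation 10, summaries 9.
    print(final_grade(6, 8, 10, 9))
```

In the example, the presentations give a project grade of 7. The weighted final grade then works out to 8.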

Pre-requisites

- Undergraduate-level mathematics and probability (will review as needed)

- Intermediate programming experience in a numerical scripting language such as Scilab, Python, R or Matlab.

Approximate Content

We will read selected sections of Mumford's book, complemented by material from the other textbooks. The focus may shift depending on research interests and the needs of students' individual projects. We plan to cover the following topics:

- Overview of Pattern Theory, Machine Learning, Pattern Recognition, Computer Vision and Image Understanding. Motivation. Basic concepts.

- Character Recognition and Syntactic Grouping. Image Understanding. (ch. 3)

- Image Texture, Image Segmentation and Gibbs Models (ch. 4)

- Faces and Flexible Templates (ch. 5): the main focus of the course

- Natural Scenes and their Multiscale Analysis (ch. 6)

- Catastrophe Theory - readings from Rene Thom's book. Qualitative pattern theory?

Main Resources

Textbooks

- Main book: Pattern Theory: The Stochastic Analysis of Real-World Signals, David Mumford and Agnes Desolneux (see uerj.tk)

  - Video: Pattern Theory, Chapter 0 screen reading: vimeo.com/65784108

  - Video: David Mumford's Lecture 1 at IMPA/Brazil, on a research topic similar to ch. 5 of the book: youtube.com/watch?v=-8H_v6njHmw

  - Video: David Mumford's Lecture 2 at IMPA/Brazil, on a research topic similar to ch. 5 of the book: youtube.com/watch?v=IxLX4_z0_hg

- Pattern Theory: From Representation to Inference, Ulf Grenander

- Structural Stability and Morphogenesis, Rene Thom. We will complement the course with ideas from this book, particularly for investigating pattern formation

Lectures

Partial listing & Tentative Outline

Part I: Chapters 0 and 1, a pattern theory overview and an introduction to its basic methods through text processing

- Overview of pattern theory and classic pattern recognition

- The classical paradigm - machine learning, pattern recognition systems, clustering, recognition, and how it all fits together: the design of the ultimate AI system

- Scilab and Matlab exercises - simulating everything with rand()

- Reviewing probability theory guided by Ch 1's first exercises

- Overview of the Bayesian approach to machine learning

- Probabilistic models for text processing - unraveling Ch 1 sec. 0, part I

- Probabilistic models for text processing - unraveling Ch 1 sec. 0, part II

- Practical programming of Ch 1 sec. 0 (frequency tables and sampling of conditional probabilities for text synthesis), guided by Ch 1's Exercise section 5 (p. 55)

- Markov Chains - main definitions and concepts of convergence

- Mutual Information, Entropies, Kullback-Leibler distances

- Word boundaries and machine translation

- Practical programming of Ch 1 - DNA Sequence statistics, p. 56 ex 6

- Extra lecture on Markov chains: Google PageRank and Markov chains for organizing large networks and for machine learning (slides: http://wiki.nosdigitais.teia.org.br/Imagem:Aula-pagerank.pdf)
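PageRank, the topic of the extra lecture, is the stationary distribution of a Markov chain. The chain models a random surfer who, with probability `damping`, follows a random out-link and otherwise jumps to a uniformly random page. Repeatedly applying this transition to a starting distribution (power iteration) converges to the stationary distribution, which also illustrates Markov chain convergence.

Below is a minimal pure-Python sketch. It assumes the graph is given as a dictionary of out-links and uses common conventions: damping = 0.85, and dangling pages spread their weight uniformly over all pages. These are assumptions, not details taken from the lecture slides.

```python
def pagerank(links, damping=0.85, tol=1e-10, max_iter=1000):
    """Estimate PageRank scores by power iteration.

    links maps every node to the list of nodes it links to; every link
    target must itself be a key. Pages with no out-links ("dangling")
    are treated as linking to all pages, one common convention.
    """
    nodes = list(links)
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}  # start from the uniform distribution
    for _ in range(max_iter):
        # Probability mass sitting on dangling pages is spread uniformly.
        dangling = sum(rank[v] for v in nodes if not links[v])
        new = {v: (1.0 - damping) / n + damping * dangling / n for v in nodes}
        # Each page passes a damped share of its rank to each of its out-links.
        for v in nodes:
            out = links[v]
            for w in out:
                new[w] += damping * rank[v] / len(out)
        # Stop once the L1 change between successive iterates is tiny.
        if sum(abs(new[v] - rank[v]) for v in nodes) < tol:
            return new
        rank = new
    return rank


if __name__ == "__main__":
    toy = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
    print(pagerank(toy))
```

On the toy graph, C ends up with the highest score (about 0.40), followed by A and then B. The scores always sum to 1, as a probability distribution should.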

Part II: jumping ahead to Chapter 5, Flexible Templates

- Overview of manifolds, differential geometry of surfaces and higher dimensions

- Bird's-eye view of Mumford's research on diffeomorphisms and infinite-dimensional differential geometry

  - See Mumford's IMPA lecture videos, listed under Textbooks

  - Infinite-dimensional nonlinear manifolds and their application to shape were already anticipated by Riemann: "There are however manifolds in which the fixing of position requires not a finite number but either an infinite series or a continuous manifold of determinations of quantity. Such manifolds are constituted for example by ... the possible shapes of a figure in space, etc."

Misc. Notes

- Slides by Mauro de Amorim summarizing Part I of the course: https://pt.sharelatex.com/project/54245152694cc3585873f383

Homework

Assignment 1

- All exercises in Ch. 1 on simulating discrete random variables with MATLAB (pp. 51, 52, 53)

- Type your solutions and hand them in by the midterm
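A discrete random variable can be simulated with `rand()` by inverting its cumulative distribution. Draw u uniformly in [0, 1), then return the first value whose cumulative probability exceeds u. Below is a minimal Python sketch in which `random.random()` plays the role of MATLAB's `rand()`. The helper name `sample_discrete` is hypothetical, and the book's exact exercise statements are not reproduced here.

```python
import random
from collections import Counter


def sample_discrete(values, probs, rng=random):
    """Draw one sample from a finite distribution by inverting its CDF.

    values and probs are parallel sequences; probs should sum to 1.
    """
    u = rng.random()  # u ~ Uniform[0, 1), like rand() in MATLAB/Scilab
    cumulative = 0.0
    for value, p in zip(values, probs):
        cumulative += p
        if u < cumulative:
            return value
    return values[-1]  # guard against round-off when probs sum to slightly < 1


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed for reproducibility
    n = 10000
    counts = Counter(sample_discrete("abc", [0.5, 0.3, 0.2], rng) for _ in range(n))
    print({k: c / n for k, c in sorted(counts.items())})
```

With a fixed seed and 10,000 draws, the observed frequencies of a, b and c should come out close to 0.5, 0.3 and 0.2.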

Assignment 2

- Summarize Chapter 0 and Chapter 1, sec. 0.

- Type your summary and hand it in by May 14, 2013

Assignment 3

- Chapter 1, exercise section 5: analyzing n-tuples in some databases

- There is no need to do anything with entropy yet; just do the practical part
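Counting n-tuples amounts to sliding a window of length n over the text. Dividing each count by the total for its first n - 1 characters then estimates the conditional probability of the next character. Below is a minimal sketch, assuming the text has already been read into a Python string. Any lowercasing or punctuation removal is left to you, and the function names are illustrative.

```python
from collections import Counter, defaultdict


def ngram_counts(text, n):
    """Count every run of n consecutive characters (an n-tuple) in text."""
    return Counter(text[i:i + n] for i in range(len(text) - n + 1))


def conditional_probs(text, n):
    """Estimate P(next char | previous n-1 chars) from n-tuple counts.

    Returns a dict mapping each (n-1)-character context to a dict of
    next-character probabilities that sum to 1.
    """
    counts = ngram_counts(text, n)
    # Total number of n-tuples that start with each context.
    context_totals = Counter()
    for gram, c in counts.items():
        context_totals[gram[:-1]] += c
    table = defaultdict(dict)
    for gram, c in counts.items():
        context, nxt = gram[:-1], gram[-1]
        table[context][nxt] = c / context_totals[context]
    return dict(table)
```

For example, `ngram_counts("banana", 2)` counts `an` and `na` twice and `ba` once. Likewise, `conditional_probs("abab", 2)` gives `{"a": {"b": 1.0}, "b": {"a": 1.0}}`.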

Fun code to look at

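- Experimental algorithm being developed by Renato Fabbri that uses basic word-occurrence statistics for text synthesis (http://labmacambira.git.sourceforge.net/git/gitweb.cgi?p=labmacambira/PLN;a=blob;f=ngramas.py;h=1fa6b86f5f315ffdbf487d5a1f43ecd3e11d6bb0;hb=HEAD), written for his ongoing Introduction to Natural Language Processing course at ICMC-USP (http://www.icmc.sc.usp.br)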
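Word-level synthesis of this kind can be sketched as a first-order Markov chain over words. First record which words follow each word in a corpus; then repeatedly sample a successor of the last generated word. The sketch below is an independent illustration, not Renato Fabbri's `ngramas.py`. The function names and the toy corpus are made up for the example.

```python
import random
from collections import defaultdict


def build_bigram_model(words):
    """Map each word to the list of words that follow it (with repetition).

    Sampling uniformly from this list is the same as sampling from the
    empirical conditional distribution P(next word | current word).
    """
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def synthesize(followers, start, length, rng=random):
    """Generate up to `length` words by walking the bigram Markov chain."""
    out = [start]
    for _ in range(length - 1):
        options = followers.get(out[-1])
        if not options:  # dead end: this word never appeared with a successor
            break
        out.append(rng.choice(options))
    return " ".join(out)


if __name__ == "__main__":
    corpus = "the cat sat on the mat and the cat ran".split()
    model = build_bigram_model(corpus)
    print(synthesize(model, "the", 8, random.Random(1)))
```

Successors are stored with repetition, so `random.choice` picks each follower in proportion to its bigram count. No explicit probability table is needed.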

Keywords

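Portuguese: Teoria dos Padrões (pattern theory), Reconhecimento de Padrões (pattern recognition), Visão Computacional (computer vision), Inteligência Artificial (artificial intelligence), Formação de Padrões (pattern formation)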