FooBarBaz: differences between revisions

(Nova página: FooBarBaz é experimento em livecoding. Apresentado no Festival Contato 2011. Mais sobre Livecoding. == Códigos == Para os códigos usados no projeto, veja AudioArt. == V...) |

Sem resumo de edição |

||

| (2 revisões intermediárias por 2 usuários não estão sendo mostradas) | |||

| Linha 1: | Linha 1: | ||

FooBarBaz é experimento em livecoding. Apresentado no Festival Contato 2011. | FooBarBaz é experimento em livecoding. Apresentado no Festival Contato 2011. | ||

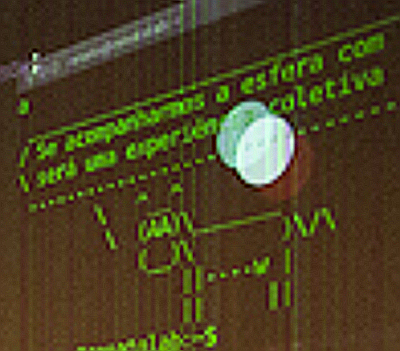

[[Image:Foobarbaz6.jpg]] | |||

[[Image:Foobarbaz1.jpg]] | |||

[[Image:Foobarbaz2.jpg]] | |||

[[Image:Foobarbaz3.jpg]] | |||

[[Image:Foobarbaz4.jpg]] | |||

[[Image:Foobarbaz5.jpg]] | |||

[[Image:Foobarbaz7.jpg]] | |||

ChucK, Vim, Emacs, Puredata, Cowsay. | |||

Mais sobre [[Livecoding]]. | Mais sobre [[Livecoding]]. | ||

Renato Fabbri | Ricardo Fabbri | Vilson Vieira | Gilson Beck | |||

== Códigos == | == Códigos == | ||

Para os códigos usados | Para os códigos usados, veja [[AudioArt]]. | ||

== Vídeos == | == Vídeos == | ||

| Linha 71: | Linha 84: | ||

Renato | Renato | ||

Renato Fabbri | Renato Fabbri | ||

---- | |||

02/12/11 para listamacambira, ChucK | 02/12/11 para listamacambira, ChucK | ||

| Linha 115: | Linha 130: | ||

cheers, | cheers, | ||

rfabbri | rfabbri | ||

Vilson Vieira | |||

02/12/11 | ---- | ||

para Renato, ChucK, listamacambira | |||

Vilson Vieira 02/12/11 para Renato, ChucK, listamacambira | |||

Hey Kassen and other Chuckists! | Hey Kassen and other Chuckists! | ||

I think it is interesting to note we used an alternative approach considering the sync between Renato and me. The sound was generated by Renato using ChucK/Vim/Jack and by me using ChucK/Emacs/Jack without sync. The audio from both of us was passed to a Pd patch running on a third computer operated by Gilson Beck, another composer, part of the trio (FooBarBaz). Gilson spatialized and mixed the audio generated by us with a visual interface: the movements of his hands were tracked by a "color tracker" implemented by Ricardo Fabbri on Pd/GEM and the x/y coordinates defined the panning effects. On this way we could mix both audio in certain times, creating a dialogue between my sound, Renato's sound and Gilson's. | I think it is interesting to note we used an alternative approach considering the sync between Renato and me. The sound was generated by Renato using ChucK/Vim/Jack and by me using ChucK/Emacs/Jack without sync. The audio from both of us was passed to a Pd patch running on a third computer operated by Gilson Beck, another composer, part of the trio (FooBarBaz). Gilson spatialized and mixed the audio generated by us with a visual interface: the movements of his hands were tracked by a "color tracker" implemented by Ricardo Fabbri on Pd/GEM and the x/y coordinates defined the panning effects. On this way we could mix both audio in certain times, creating a dialogue between my sound, Renato's sound and Gilson's. | ||

| Linha 123: | Linha 140: | ||

I think Gilson can send you more details about his Pd patch and some videos about the human body interface tracked by colors. | I think Gilson can send you more details about his Pd patch and some videos about the human body interface tracked by colors. | ||

All the best. | All the best. | ||

Current revision as of 16:02, 5 June 2012

FooBarBaz is a livecoding experiment. Presented at Festival Contato 2011.

ChucK, Vim, Emacs, Puredata, Cowsay.

More about Livecoding.

Renato Fabbri | Ricardo Fabbri | Vilson Vieira | Gilson Beck

Code

For the code used, see AudioArt.

Videos

- live coding presentation, part 1 a: basic principles of the live coding presentation we did on 20/11/2011 for about 3.5 thousand people at Festival CONTATO, São Carlos, Brazil.

- live coding presentation part1 b: REM and cows: about Rapid Eye Movement (REM) and the use of cows in the live coding presentation we did on 20/11/2011 for about 3.5 thousand people at Festival CONTATO, São Carlos, Brazil.

- live coding presentation part2 improvisation: the improvisation part of the live coding presentation we did on 20/11/2011 for about 3.5 thousand people at Festival CONTATO, São Carlos, Brazil.

- presentation part3 soundscapes: the part where we used soundscapes in the live coding presentation we did on 20/11/2011 for about 3.5 thousand people at Festival CONTATO, São Carlos, Brazil.

- presentation part4 improvisation2 ending: the ending of the live coding presentation we did on 20/11/2011 for about 3.5 thousand people at Festival CONTATO, São Carlos, Brazil.

Remembering the experiment

Renato Fabbri via lists.cs.princeton.edu 28/11/11 to ChucK

This is what I used, and it was quite enough given that the performance also had another live coder and a Pd and mixer improviser:

http://ubuntuone.com/7P9ZFMFVVa9cBr4LZ1xtjg

My replace map doesn't work, though (last line of the text file at the link). Any idea? BTW, we live coded for more than 2 thousand people here in Brazil at Festival Contato. Some say ~5 thousand; I guess ~3.5k. ChucK live-coding with Vim rvl3z. Vilson Vieira, the other live coder, used Emacs. We projected both desktops at the same time.

cheers!,

Renato

Renato Fabbri 30/11/11 to ChucK

Well, I wanted to document what I did, so here it goes as it came. No sound, just a screen capture (perfect for watching while you listen to some music of your preference :P). I have not checked how it turned out and can't look at it now. The mpeg files play fine here; I am using mplayer on Linux. But they did not play in Kaffeine or in another player (I don't recall its name).

presentation-part1.mpeg http://ubuntuone.com/0w8vde6POCJdUhAfDRCB0G

presentation-part1-REM-and-cows.mpeg http://ubuntuone.com/2biXjEGbLARAG9gyJf8MmL

presentation-part2-improvisation.mpeg http://ubuntuone.com/2l6W8HhAEcw5DTcuLxP2wn

presentation-part3-soundscapes.mpeg http://ubuntuone.com/6UXsfV59e7AnvOtAJjWKAO

presentation-part4-improvisation2-ending.mpeg (uploading) http://ubuntuone.com/55te5BtDx7Fb9DdkPlezLV

I don't know if they uploaded correctly; I should put them on Vimeo. I would like to have my partner's screen as well, but he had a problem with his laptop. Vilson, where is the code you used to play with? All the best and cheers,

Renato

Renato Fabbri 02/12/11 to listamacambira, ChucK

> I like how you make a glorious mess instead of the stark minimalism of
> the other livecoding I've seen. I'm not sure how this would scale, but
> the difference is exciting.

Thanks! I like that too. The idea is to use the desktop to play and make it more appealing. That bouncing white ball is 'processing'. The cow is 'cowsay'. Some years ago I did what I now call LDP (Linux Desktop Playing) with jack-rack, ardour, audacity, Pd, chuck, python and even audacious. That was a really big mess, especially with ABT:

http://trac.assembla.com/audioexperiments/browser/ABeatDetector

Maybe what we are doing is live coding with an inheritance from LDP.

Anyway, these are the 5 short videos on Vimeo, so anyone can take a look:

- live coding presentation, part 1 a: basic principles of the live coding presentation we did on 20/11/2011 for about 3.5 thousand people at Festival CONTATO, São Carlos, Brazil. http://vimeo.com/33012735

- live coding presentation part1 b: REM and cows: about Rapid Eye Movement (REM) and the use of cows in the live coding presentation we did on 20/11/2011 for about 3.5 thousand people at Festival CONTATO, São Carlos, Brazil. http://vimeo.com/33018740

- live coding presentation part2 improvisation: the improvisation part of the live coding presentation we did on 20/11/2011 for about 3.5 thousand people at Festival CONTATO, São Carlos, Brazil. http://vimeo.com/33019291

- presentation part3 soundscapes: the part where we used soundscapes in the live coding presentation we did on 20/11/2011 for about 3.5 thousand people at Festival CONTATO, São Carlos, Brazil. http://vimeo.com/33025717

- presentation part4 improvisation2 ending: the ending of the live coding presentation we did on 20/11/2011 for about 3.5 thousand people at Festival CONTATO, São Carlos, Brazil. http://vimeo.com/33025913

cheers,

rfabbri

Vilson Vieira 02/12/11 to Renato, ChucK, listamacambira

Hey Kassen and other Chuckists!

I think it is interesting to note that we used an alternative approach to the sync between Renato and me. The sound was generated by Renato using ChucK/Vim/Jack and by me using ChucK/Emacs/Jack, without sync. The audio from both of us was passed to a Pd patch running on a third computer operated by Gilson Beck, another composer and part of the trio (FooBarBaz). Gilson spatialized and mixed the audio generated by us with a visual interface: the movements of his hands were tracked by a "color tracker" implemented by Ricardo Fabbri in Pd/GEM, and the x/y coordinates defined the panning effects. In this way we could mix both audio streams at certain times, creating a dialogue between my sound, Renato's sound and Gilson's.

Unfortunately I lost my laptop and all the code on it after the presentation, but I used a screen similar to the one in Renato's recorded screencasts, using ChucK as a live sampler, similar to Thor's ixilang approach. A snippet of the code was saved here: https://gist.github.com/1379142

I think Gilson can send you more details about his Pd patch and some videos about the human body interface tracked by colors.

All the best.
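
The mixing chain described in the message above (a color tracker yielding x/y coordinates that drive panning over two unsynchronized audio sources) can be illustrated with a small Python sketch. This is not the Pd/GEM patch used on stage, which is not reproduced here. The function names `track_color`, `equal_power_pan` and `mix` are hypothetical, the equal-power pan law is an assumption, and mapping x to stereo position and y to a crossfade between the two live coders is only one plausible reading of "the x/y coordinates defined the panning effects".

```python
import math


def track_color(frame, target, tolerance=40):
    """Return the normalized (x, y) centroid of pixels close to `target`.

    `frame` is a list of rows, each row a list of (r, g, b) tuples.
    Returns None when no pixel matches. Hypothetical stand-in for the
    Pd/GEM color tracker mentioned in the message.
    """
    height = len(frame)
    width = len(frame[0])
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, (r, g, b) in enumerate(row):
            if (abs(r - target[0]) <= tolerance
                    and abs(g - target[1]) <= tolerance
                    and abs(b - target[2]) <= tolerance):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    # Scale pixel coordinates to the 0.0..1.0 range.
    nx = (sum_x / count) / (width - 1) if width > 1 else 0.5
    ny = (sum_y / count) / (height - 1) if height > 1 else 0.5
    return nx, ny


def equal_power_pan(position):
    """Left/right gains for a pan position from 0.0 (left) to 1.0 (right)."""
    position = min(1.0, max(0.0, position))
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)


def mix(sample_a, sample_b, x, y):
    """Mix two mono samples into one stereo (left, right) pair.

    Assumed mapping: y crossfades from source a (y=0) to source b (y=1),
    and x pans the result across the stereo field.
    """
    left_gain, right_gain = equal_power_pan(x)
    mono = (1.0 - y) * sample_a + y * sample_b
    return mono * left_gain, mono * right_gain
```

In use, each video frame would go through `track_color`, and the resulting coordinates would be passed to `mix` for every pair of incoming samples. A real-time version would also smooth the coordinates between frames to avoid audible jumps.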